What is a Data Pipeline?

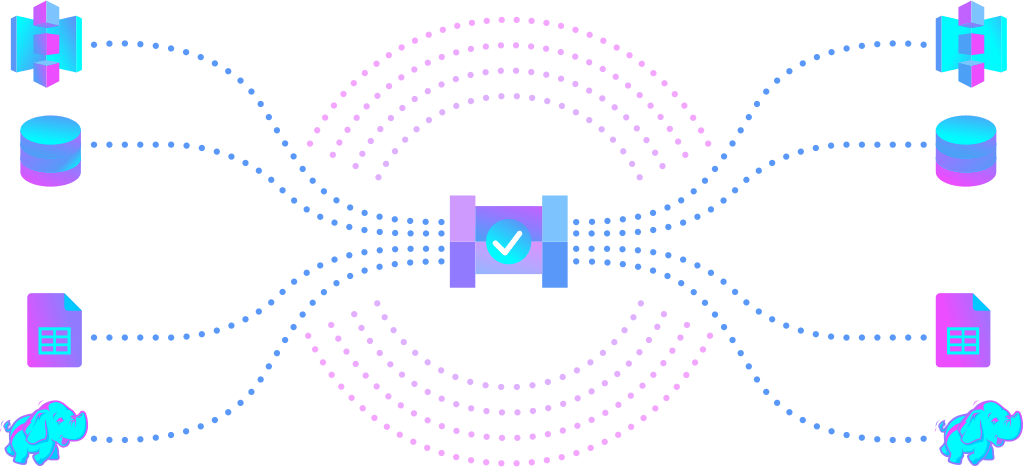

A data pipeline is a computing practice in which one or more datasets are modified through a series of steps. The steps are typically sequential, each feeding the next with its amended version of the dataset. Once the data has been through all the steps, the pipeline is complete and the resulting data is written (sent) to a new destination.

The source

All data pipelines need a source of one or more datasets. These sources can be simple .csv files, databases, or, increasingly, cloud-based storage platforms. The source plugs into the pipeline at the beginning, and either all of its contents or a subset of them is brought forward into the pipeline steps.

The steps

Steps can be very simple or very complex. Some require deep logic and high computational power, whilst others need comparatively few resources. The key benefit of building a pipeline from steps is that each step can be changed independently of the others and optimised individually to improve the pipeline as a whole.
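One way to picture independent steps is as plain functions chained in order. The following is a minimal sketch rather than a reference implementation; the step names (`drop_missing_price`, `add_total`), the `run_pipeline` helper, and the sample rows are all hypothetical and chosen only for illustration.

```python
from functools import reduce
from typing import Callable, Iterable

Row = dict
Step = Callable[[list[Row]], list[Row]]


def drop_missing_price(rows: list[Row]) -> list[Row]:
    """Step 1: keep only rows that have a price."""
    return [r for r in rows if r.get("price") is not None]


def add_total(rows: list[Row]) -> list[Row]:
    """Step 2: derive a new field from existing ones."""
    return [{**r, "total": r["price"] * r["quantity"]} for r in rows]


def run_pipeline(rows: list[Row], steps: Iterable[Step]) -> list[Row]:
    """Feed the output of each step into the next, in order."""
    return reduce(lambda data, step: step(data), steps, rows)


# Hypothetical source data.
source = [
    {"sku": "A1", "price": 10.0, "quantity": 2},
    {"sku": "B2", "price": None, "quantity": 1},
    {"sku": "C3", "price": 4.5, "quantity": 4},
]

destination = run_pipeline(source, [drop_missing_price, add_total])
print(destination)
```

Because each step only takes rows in and hands rows out, one step can be swapped or tuned without touching the others.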

The destination

Much like the source, the destination is a store of data, often similar to or the same type as the source. The destination is often a larger, aggregated dataset made up of the combined outputs of multiple pipelines and other processes. The destination can itself be used as a source, feeding newly ‘pipelined’ data into further, deeper pipelines involving processes like statistical modelling and machine learning.

There is no limit to how many pipelines data can go through. The same data can be split across multiple pipelines running in parallel, each extracting its own insight, before the results are joined back together to create new intelligence for further processing, reporting, or feeding applications.
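The split-and-join idea can be sketched with Python's standard `concurrent.futures` module. This is an illustrative sketch only: the two branch functions and the `orders` data are hypothetical, and a thread pool is just one of several ways to run branches side by side (for CPU-heavy work in Python, threads give limited real parallelism).

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical input shared by both branches.
orders = [
    {"customer_id": 1, "amount": 120.0},
    {"customer_id": 2, "amount": 35.0},
    {"customer_id": 1, "amount": 80.0},
]


def revenue_per_customer(rows):
    """Branch A: total spend per customer."""
    totals = {}
    for r in rows:
        totals[r["customer_id"]] = totals.get(r["customer_id"], 0.0) + r["amount"]
    return totals


def orders_per_customer(rows):
    """Branch B: number of orders per customer."""
    counts = {}
    for r in rows:
        counts[r["customer_id"]] = counts.get(r["customer_id"], 0) + 1
    return counts


# Submit both branches against the same input.
with ThreadPoolExecutor() as pool:
    revenue_future = pool.submit(revenue_per_customer, orders)
    count_future = pool.submit(orders_per_customer, orders)
    revenue, counts = revenue_future.result(), count_future.result()

# Join the two results back together on customer_id.
joined = {
    cid: {"revenue": revenue[cid], "orders": counts[cid]}
    for cid in revenue
}
print(joined)
```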

An example

Within eCommerce companies, data pipelines are very common. In this example, the sales and marketing team wants to understand which digital marketing activity drove the highest-value customers over the last quarter. The company has three separate systems: Google Analytics, a Customer Database (CRM), and a Data Warehouse. The team decides to build two sequential data pipelines.

The first data pipeline draws on both Google Analytics and the Customer Database.

Step 1: The web data and the customer data are merged from their two separate datasets into one new table, matching customer IDs captured by Google Analytics with the same customer ID from the CRM.

Step 2: Customers who have no discernible marketing source or campaign attributed to their purchases are removed from the new dataset.

Step 3: Customers who made a purchase but ended up getting a refund that resulted in zero or negative revenue for the company are also removed.

The final ‘purchaser’ dataset, containing customers and their attributed marketing channels, sources, and campaigns, is then sent to the Data Warehouse.
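The three steps of this first pipeline can be sketched in plain Python. The sketch below is hedged throughout: the field names (`source`, `campaign`) and the sample rows are assumptions made for illustration, `customer_revenue` is borrowed from the second pipeline's description, and a dictionary stands in for the real Data Warehouse load. A real implementation would read from the Google Analytics and CRM exports instead.

```python
# Hypothetical extracts standing in for Google Analytics and the CRM.
web_sessions = [
    {"customer_id": 1, "source": "google", "campaign": "spring_sale"},
    {"customer_id": 2, "source": None, "campaign": None},
    {"customer_id": 3, "source": "newsletter", "campaign": "welcome"},
    {"customer_id": 4, "source": "facebook", "campaign": "retargeting"},
]
crm_customers = [
    {"customer_id": 1, "customer_revenue": 250.0},
    {"customer_id": 2, "customer_revenue": 90.0},
    {"customer_id": 3, "customer_revenue": 0.0},   # purchase fully refunded
    {"customer_id": 4, "customer_revenue": 40.0},
    {"customer_id": 5, "customer_revenue": 60.0},  # no matching web data
]


def merge_on_customer_id(web, crm):
    """Step 1: join the two datasets on customer_id (matched rows only)."""
    crm_by_id = {c["customer_id"]: c for c in crm}
    return [
        {**w, **crm_by_id[w["customer_id"]]}
        for w in web
        if w["customer_id"] in crm_by_id
    ]


def drop_unattributed(rows):
    """Step 2: remove customers with neither a source nor a campaign."""
    return [r for r in rows if r["source"] or r["campaign"]]


def drop_non_positive_revenue(rows):
    """Step 3: remove customers whose net revenue is zero or negative."""
    return [r for r in rows if r["customer_revenue"] > 0]


purchasers = merge_on_customer_id(web_sessions, crm_customers)
purchasers = drop_unattributed(purchasers)
purchasers = drop_non_positive_revenue(purchasers)

# Stand-in for loading the result into the Data Warehouse.
data_warehouse = {"purchaser": purchasers}
print([p["customer_id"] for p in purchasers])
```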

The second data pipeline is one of a number of statistical models that will be run on the newly created ‘purchaser’ dataset from the Data Warehouse.

Step 1: Purchasers are run through a linear regression model, with ‘customer_revenue’ examined against the session source, campaign, and demographic factors.

The model data is then pushed back into the Data Warehouse, where the mean outputs of the model can be explored by analysts, who use the insight to segment and target new prospects and refine their existing customer definitions.
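To make the regression step concrete, here is a deliberately reduced sketch: an ordinary least-squares fit of ‘customer_revenue’ against a single 0/1 predictor. The `is_paid` flag and the sample figures are hypothetical, and the model described above would use several predictors (source, campaign, demographics), typically through a statistics library rather than hand-written arithmetic.

```python
from statistics import mean

# Hypothetical purchaser rows: is_paid is 1 when the session came from a paid campaign.
purchasers = [
    {"is_paid": 1, "customer_revenue": 120.0},
    {"is_paid": 1, "customer_revenue": 80.0},
    {"is_paid": 0, "customer_revenue": 60.0},
    {"is_paid": 0, "customer_revenue": 40.0},
]

x = [p["is_paid"] for p in purchasers]
y = [p["customer_revenue"] for p in purchasers]

# Ordinary least squares for one predictor:
# slope = covariance(x, y) / variance(x), intercept = mean(y) - slope * mean(x)
x_bar, y_bar = mean(x), mean(y)
slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sum(
    (xi - x_bar) ** 2 for xi in x
)
intercept = y_bar - slope * x_bar

print(f"revenue = {intercept:.1f} + {slope:.1f} * is_paid")
```

With a single 0/1 predictor, the intercept is the average revenue of the unpaid group and the slope is the difference between the two group averages, which is the kind of output an analyst could use for segmentation.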

A similar series of pipelines and steps could be used by a wealth management firm to find its customers’ most profitable assets, or by a software company to find the features most commonly used by its most active users.

Big Data Pipelines

The three V’s of Big Data (volume, variety, and velocity) bring all sorts of opportunities for data pipelines, each with its own demands on hardware and software alike.

Today, big data analytics engines such as Apache Spark tackle the scaling challenges of volume by distributing large datasets across hardware more efficiently than ever before, allowing even modest servers and PCs to process very large datasets relatively quickly.

Outside a need for better-performing applications, the volume of big data has not affected traditional processes nearly as much as its variety and velocity, which are both demanding and enabling new heights in data pipeline processing.

The traditional rows and columns of structured data are now increasingly matched with unstructured and semi-structured data. These new varieties of data introduce complex, multi-relational datasets that quite literally add dimensions to the intelligence that can be gathered from step to step.

The new velocities of data refer to a growing demand for real-time analytics. Streaming is just one method data pipelines have developed to meet the need for real-time data flows. Traditionally, data pipelines run ‘jobs’ at set intervals, triggered either manually or automatically by a scheduler. With data streaming, by comparison, new additions to the source data are fed through the pipeline to the destination as they arrive and are then put to use, feeding anything from personalized user interfaces to weather and financial forecasting models.
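The difference between a scheduled batch job and a stream can be sketched with a list and a generator. This is a simplified illustration under stated assumptions: the `transform` step, the `amount_pence` field, and the sample events are hypothetical, and a real streaming pipeline would read from a message queue or similar source rather than an in-memory list.

```python
def transform(event):
    """A single pipeline step applied to one record."""
    return {**event, "amount_gbp": round(event["amount_pence"] / 100, 2)}


def run_batch(accumulated_events):
    """Batch job: process everything collected since the last run in one go."""
    return [transform(e) for e in accumulated_events]


def run_stream(event_source):
    """Streaming: hand on each transformed record as soon as it arrives."""
    for event in event_source:
        yield transform(event)


# Hypothetical events.
events = [
    {"id": 1, "amount_pence": 1250},
    {"id": 2, "amount_pence": 399},
]

# Batch: nothing reaches the destination until the job runs over the whole set.
batch_output = run_batch(events)

# Stream: each record reaches the destination individually, one at a time.
destination = []
for record in run_stream(iter(events)):
    destination.append(record)

print(batch_output == destination)
```

Both routes produce the same records; what changes is when each record becomes available downstream.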

What does ETL mean?

ETL is a term familiar to techies but has little resonance in the marketing or wider analytics spheres. It stands for “extract, transform, load”, referring to the movement of data as it is ‘extracted’ from one system, ‘transformed’ (modified), and then ‘loaded’ into a destination. Many common data pipelines could be described as ETL processes, though their purpose is often far more analytical: they seek intelligence, not just the movement and cleansing of data.
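The three ETL stages map neatly onto a few lines of standard-library Python. The sketch below is illustrative only: the CSV contents, the `purchaser` table, and its columns are hypothetical, an in-memory string stands in for a source file, and an in-memory SQLite database stands in for the destination.

```python
import csv
import io
import sqlite3

# Extract: read rows from a CSV source (an in-memory string stands in for a file).
raw_csv = "customer_id,revenue\n1,250.00\n2,\n3,40.50\n"
rows = list(csv.DictReader(io.StringIO(raw_csv)))

# Transform: drop rows with no revenue and convert text fields to numbers.
clean = [
    (int(r["customer_id"]), float(r["revenue"]))
    for r in rows
    if r["revenue"].strip()
]

# Load: write the cleaned rows into the destination table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE purchaser (customer_id INTEGER PRIMARY KEY, revenue REAL)")
conn.executemany("INSERT INTO purchaser VALUES (?, ?)", clean)
conn.commit()

loaded = conn.execute(
    "SELECT customer_id, revenue FROM purchaser ORDER BY customer_id"
).fetchall()
print(loaded)
```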

In conclusion

Data pipelines are evolving fast. However, traditional practices are as popular as ever and, with help from new technologies, have remained unfazed by the introduction of big data. Most companies employing data pipelines aren’t yet using truly ‘big data’, but that doesn’t mean they can’t enjoy the speed and power of systems built for more ambitious processing that was once thought improbable.