Define a name for output files in AWS S3

By default, the partition files that your data pipelines write to AWS S3 are given dynamically generated names. This tutorial shows you how to define a fixed name instead.

Apache Spark, the analytics engine underlying Data Pipelines, processes data in parallel across several machines. Each machine delivers its share of the output as a separate partition file. These files are then collected and written to the AWS S3 destination defined in your data pipeline.

By default, each partition file is given a random name, for example part-00000-6f712e1e-0064-4113-bb63-017ba22b4252-c000.csv.
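Because these names are random, downstream code that needs to pick up the files cannot look for a fixed name and has to match a pattern instead. The Python sketch below assumes the layout seen in the example above: part-, a five-digit partition index, a UUID, a -c counter and a file extension. This layout is inferred from the example and should not be treated as a documented guarantee; the names PART_FILE_RE, PartFile and parse_part_file are hypothetical.

```python
import re
from typing import NamedTuple, Optional

# Assumed layout of a partition file name, inferred from the example above:
# part-<5-digit partition index>-<UUID>-c<3-digit counter>.<extension>
PART_FILE_RE = re.compile(
    r"^part-(?P<index>\d{5})-"
    r"(?P<uuid>[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12})"
    r"-c(?P<counter>\d{3})\.(?P<ext>[\w.]+)$"  # [\w.]+ also accepts e.g. "csv.gz"
)


class PartFile(NamedTuple):
    index: int    # partition number
    uuid: str     # random identifier, different for every run
    counter: int  # file counter within the partition
    ext: str      # file extension, e.g. "csv"


def parse_part_file(name: str) -> Optional[PartFile]:
    """Return the parts of a partition file name, or None if it does not match."""
    m = PART_FILE_RE.match(name)
    if m is None:
        return None
    return PartFile(int(m["index"]), m["uuid"], int(m["counter"]), m["ext"])
```

To find the partition files in an S3 listing, you could keep only the keys whose last path segment parses, e.g. `[k for k in keys if parse_part_file(k.rsplit("/", 1)[-1])]`.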

Often, the output data is processed further by other software that needs to know the filename in advance. To support this, you can define the output filename for your data pipeline. Note that this option is only available when the partition files are coalesced (merged together) into a single output file.
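The coalesce option merges the partitions inside Spark before the output is written. To illustrate what a single merged file means for CSV data, here is a local post-processing analogue, not what Data Pipelines does internally. It is a minimal sketch that assumes the partition files have already been downloaded to a local directory and that every file starts with the same header row; the function name merge_csv_parts is hypothetical.

```python
import csv
from pathlib import Path
from typing import Iterable


def merge_csv_parts(part_files: Iterable[Path], target: Path) -> int:
    """Concatenate CSV partition files into one file named `target`.

    Assumes every part file has the same header row; the header is written once.
    Returns the number of data rows written.
    """
    # Materialize and sort the inputs before creating `target`, so the target
    # file can never be picked up as one of its own inputs.
    parts = sorted(part_files)  # sorting puts partition 00000 first
    rows_written = 0
    header_written = False
    with target.open("w", newline="") as out:
        writer = csv.writer(out)
        for part in parts:
            with part.open(newline="") as f:
                reader = csv.reader(f)
                header = next(reader, None)
                if header is None:
                    continue  # skip empty part files
                if not header_written:
                    writer.writerow(header)
                    header_written = True
                for row in reader:
                    writer.writerow(row)
                    rows_written += 1
    return rows_written
```

For example, `merge_csv_parts(Path("out").glob("part-*.csv"), Path("out/my-custom-filename.csv"))` would produce one file with a predictable name.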

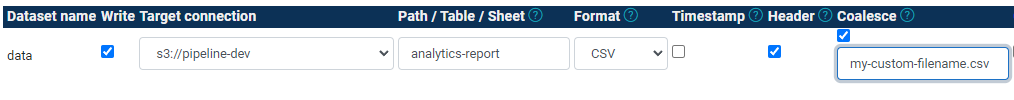

To define a name for the output file when scheduling a pipeline, check the 'coalesce' option and enter a name for your file, including the file extension.

For example: my-custom-filename.csv

The naming option is available only for file-based outputs (CSV, JSON, etc.).
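Since the name has to include the file extension, it can help to check it against the output format before scheduling. The following Python sketch is purely illustrative: EXPECTED_EXTENSIONS and check_output_filename are hypothetical names, and the extension mapping is an assumption you would adjust to the formats you actually use.

```python
from pathlib import PurePosixPath
from typing import List

# Hypothetical: the extension we expect for each file-based output format.
EXPECTED_EXTENSIONS = {"csv": ".csv", "json": ".json"}


def check_output_filename(filename: str, output_format: str) -> List[str]:
    """Return a list of problems with `filename`; an empty list means it looks usable."""
    problems = []
    path = PurePosixPath(filename)
    if not path.stem:
        problems.append("file name is empty")
    expected = EXPECTED_EXTENSIONS.get(output_format.lower())
    if expected is None:
        problems.append(f"no extension known for output format '{output_format}'")
    elif path.suffix.lower() != expected:
        problems.append(f"name should end with {expected}")
    return problems
```

For example, `check_output_filename("my-custom-filename", "csv")` reports that the name is missing its .csv extension.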

Please note that coalescing the partition files into a single file can reduce performance, because the single output file is no longer written in parallel. It may also cause an Out Of Memory error if the coalesced file is larger than the memory available on a single driver instance (the machine that collects the partition files).
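Before enabling coalescing for a large dataset, a rough size check can help. This Python sketch assumes you know the size in bytes of each partition file and the memory of the driver instance. The overhead_factor parameter is a hypothetical guess at how much larger the data is in memory than on disk (it is often larger, especially for compressed files), so treat the result as a rough estimate only; coalesce_fits is a hypothetical name.

```python
from typing import Iterable, Tuple

MIB = 1024 ** 2
GIB = 1024 ** 3


def coalesce_fits(partition_sizes: Iterable[int], available_memory: int,
                  overhead_factor: float = 2.0) -> Tuple[int, bool]:
    """Rough pre-check before coalescing partitions into one output file.

    partition_sizes:  size of each partition file, in bytes.
    available_memory: memory of the instance that builds the single file, in bytes.
    overhead_factor:  assumed ratio of in-memory size to on-disk size (a guess).
    Returns the total on-disk size and whether the estimate fits in memory.
    """
    total = sum(partition_sizes)
    return total, total * overhead_factor <= available_memory
```

With four 200 MiB partitions, the estimate is 1600 MiB, which fits in 4 GiB but not in 1 GiB.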

To learn more about how to schedule your pipelines, read our tutorial on How to Schedule Your Data Pipeline.